Introduction

Cloud computing has attracted a great deal of interest in recent years, and both experts and the media are generally enthusiastic about its prospects. In May 2008, Merrill Lynch predicted that the cost benefits of cloud computing would be three to five times greater for business applications and more than five times greater for consumer applications. In a press release dated June 2008, Gartner predicted that cloud computing would have "no less of an impact than e-business" (Gartner 2008a). The definition of cloud computing is still debated to some degree: some deny that it exists at all or call it a meaningless term. Others point out its resemblance to older technologies such as client-server architecture, a distributed paradigm in which "servers" provide content and "clients" request and display it. According to a technical report by the Reliable Adaptive Distributed Systems Laboratory (RAD Lab) at UC Berkeley, three aspects set cloud computing apart from conventional business models:

- The illusion of infinite computing resources available on demand.

- Cloud users no longer need to make an upfront commitment.

- The capacity to pay for the temporary use of computing resources as required.

The National Institute of Standards and Technology (NIST) defines cloud computing as a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (for example, networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort or service provider interaction. A simpler definition is the delivery of hosted services over the Internet: content comes from somewhere other than the user's own computing device, whether a desktop computer, laptop, smartphone, or tablet. This simpler definition also implies a movement from here, where data reside on a local desktop or server within one's own organization, to there, where data have moved somewhere not directly under one's supervision. This movement describes most, if not all, of cloud computing.

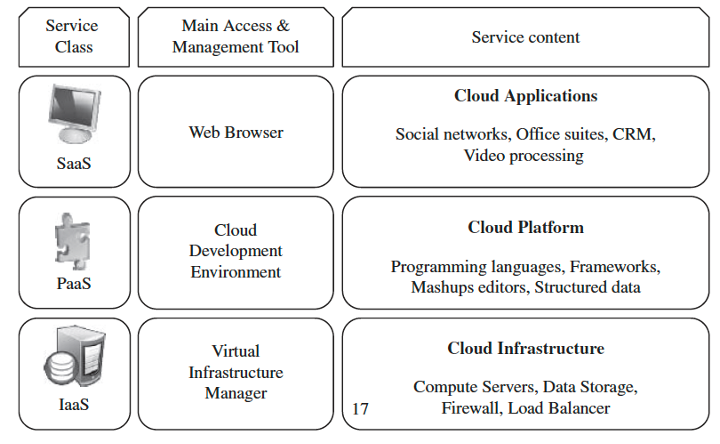

Cloud computing broadens the way we think about internetworking technology and creates new challenges for the architecture, design, and implementation of current networks and data centers. Research in this area has gained momentum only recently, and many open questions and new problems remain to be investigated. Cloud computing solutions are typically built as applications on top of services. These services are delivered over the network, through standard techniques such as web services, by providers that are independent of the customers. Applications are composed on demand as service mashups, which may span heterogeneous platforms with well-defined behavior. Cloud service providers can be divided into three broad categories: Platform as a Service (PaaS), Infrastructure as a Service (IaaS), and Software as a Service (SaaS).

SaaS gives application developers access to services (or even whole applications) hosted on the provider's cloud infrastructure. These can be accessed programmatically through standard Web services to build applications, or interactively through web browsers. The user can neither control nor even see the underlying infrastructure.

PaaS allows users to deploy finished applications on cloud infrastructure without having to manage that infrastructure. Rather than providing services, the suppliers provide libraries and tools with which users deploy the application itself. Applications run transparently on the virtual platform, as with Google App Engine and Morph Labs.

IaaS refers to the explicit provisioning of computing, data storage, and networking resources as services, through virtual machines running on the provider's infrastructure. Here, users are fully aware of the virtual infrastructure and are responsible for maintaining it. Under a multi-tenant model, the provider's computing resources are pooled to serve several customers, with physical and virtual resources dynamically assigned and reassigned according to customer demand.

The benefits of cloud computing according to AWS include:

- Agility: You can easily access a wide variety of technologies thanks to the cloud, which allows you to innovate more quickly and create almost anything you can think of. You may instantly spin up resources as you require them, including Internet of Things, machine learning, data lakes, analytics, and infrastructure services like computation, storage, and databases. Technology services may be deployed quickly, allowing you to move from idea to implementation much more quickly than in the past. This allows you the flexibility to try new things, test novel customer experience concepts, and reinvent your company.

- Elasticity: With cloud computing, you can handle future peaks in business activity without having to over-provision resources now. As an alternative, you only provision the resources that you truly require. As your company's demands change, you may scale these resources up or down to immediately increase and decrease capacity.

- Cost savings: With the cloud, you can trade fixed costs (such as data centers and physical servers) for variable costs and pay only for the IT you actually use. Also, because of economies of scale, these variable costs are much lower than what you would pay to do it yourself.

- Deploy globally in minutes: Using the cloud, you may quickly deploy globally and grow to new geographic areas. For instance, AWS offers infrastructure all over the world, allowing you to quickly deploy your application in several physical locations. Applications that are located nearer to end users have lower latency and provide better user experiences.
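The elasticity point above can be made concrete with a minimal target-tracking rule: given the current load and an assumed capacity per instance, run just enough instances to stay under a target utilization, clamped between a floor and a ceiling. The function name, the 70% target, and the bounds are hypothetical illustration values; managed services such as AWS Auto Scaling have their own policy settings.

```python
import math


def desired_instances(load_rps: float, capacity_per_instance_rps: float,
                      min_instances: int = 1, max_instances: int = 20,
                      target_utilization: float = 0.7) -> int:
    """Hypothetical target-tracking rule: enough instances that each one runs
    at or below `target_utilization` of its capacity."""
    if capacity_per_instance_rps <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    needed = math.ceil(load_rps / (capacity_per_instance_rps * target_utilization))
    # Never go below the floor (availability) or above the ceiling (budget).
    return max(min_instances, min(max_instances, needed))
```

For example, 1,000 requests per second with instances that each handle 100 requests per second yields 15 instances; when the load drops to 50 requests per second, the same rule scales back down to a single instance.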

History of Cloud Computing

The Defense Advanced Research Projects Agency (DARPA, then known as ARPA) gave MIT $2 million in 1963 for Project MAC. A condition of the funding was that MIT develop technology that would enable "two or more individuals to utilize a computer concurrently." Here, one of those enormous early computers that used reels of magnetic tape for memory served as the forerunner of what is now called cloud computing: it acted as a primitive cloud that two or three people could access. This arrangement was called "virtualization," although the meaning of the term was later broadened.

J. C. R. Licklider helped create a very early version of the Internet, the ARPANET (Advanced Research Projects Agency Network), in 1969. Licklider, also known as "Lick," was a computer scientist and psychologist who promoted an idea he called the "Intergalactic Computer Network," in which everyone on Earth would be connected to computers and could access information from anywhere. (What might such an impractical, unaffordable, futuristic fantasy look like?) The Intergalactic Computer Network, now known as the internet, is what makes access to the cloud possible. The meaning of virtualization began to change in the 1970s; today the term refers to creating a virtual machine that behaves exactly like a real computer. With the advent of the internet, companies began renting out "virtual" private networks, further evolving the idea of virtualization. The use of virtual computers grew in popularity throughout the 1990s and led to the development of today's cloud computing infrastructure.

Telecommunications companies began offering virtualized private network connections in the 1990s. Previously, telecommunications providers had offered only single, dedicated point-to-point data connections. The new virtualized private network connections were cheaper and provided the same quality of service as their dedicated counterparts. Instead of expanding their physical infrastructure so that more users could have their own connections, telecommunications companies could now give customers shared access to the same physical infrastructure. The list below briefly summarizes the development of cloud computing:

- Grid computing: Using parallel computing to solve large problems.

- Utility computing: Providing computing resources as a metered service.

- SaaS: Network-based application subscription services.

- Cloud computing: Dynamically provided IT resources accessible from anywhere at any time.

Amazon launched its web-based retail services in 2002. It was the first major company to treat using only about 10% of its computing capacity, which was typical at the time, as a problem to be solved. The cloud computing infrastructure model allowed Amazon to use its computers' capacity far more efficiently, and other large businesses soon followed its example. In 2006, Amazon launched Amazon Web Services, which provides online services to other websites and customers. One of its offerings, Amazon Mechanical Turk, provides a range of cloud-based services, including "human intelligence," compute, and storage. Elastic Compute Cloud (EC2), another Amazon Web Services offering, lets users rent virtual computers and run their own software on them.

In 2011, IBM unveiled the IBM SmartCloud framework in support of its Smarter Planet initiative (a cultural thinking project). Later that year, Apple introduced iCloud, which focuses on storing personal data (photos, music, videos, etc.). Also that year, Microsoft began advertising the cloud on television, making the public aware of its ability to store photos and videos with easy access.

Oracle introduced the Oracle Cloud in 2012, offering the three basics for business, IaaS (Infrastructure-as-a-Service), PaaS (Platform-as-a-Service), and SaaS (Software-as-a-Service). These "basics" swiftly established themselves as the norm, with some public clouds providing all of them while others concentrated on providing just one. Software as a service gained a lot of traction.

App developers are currently among the main consumers of cloud services. In 2016, the cloud began to shift from being developer-friendly to being developer-driven, as application developers started using the cloud's tools to their full potential. To attract more customers, many services strive to be developer-friendly. Having recognized both the need and the potential for profit, cloud providers built (and continue to build) the tools that app developers want and need.

The story is not over yet: cloud computing is still in its infancy.

Cloud Computing Stack

Cloud computing services are commonly classified into three classes, according to the level of abstraction of the functionality provided and the service model of the provider:

- Infrastructure as a Service,

- Platform as a Service,

- Software as a Service.

Figure 1.1 shows the layered structure of the cloud stack, from physical infrastructure to applications.


Figure 1.1: The cloud stack, organized from physical infrastructure to applications.

- Infrastructure as a Service

Offering virtualized resources (computation, storage, and communication) on demand is known as Infrastructure as a Service (IaaS). Amazon Web Services, for instance, mainly offers IaaS, which in the case of its EC2 service means offering VMs with a software stack that can be customized much as an ordinary physical server would be. Users are granted privileges to perform numerous operations on the server, such as starting and stopping it, customizing it by installing software packages, attaching virtual disks to it, and configuring access permissions and firewall rules.
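The server operations listed above correspond to EC2 API calls. The sketch below assumes the AWS SDK for Python (boto3) and takes an already-created EC2 client as a parameter, so it can also be exercised with a stand-in object. The machine image ID, volume ID, security group ID, device name, and CIDR range are placeholders you would replace with your own resources; this is an illustration, not a complete provisioning script.

```python
from typing import Any, Dict


def launch_and_configure(ec2: Any, ami_id: str, instance_type: str,
                         volume_id: str, security_group_id: str,
                         admin_cidr: str) -> str:
    """Start a VM, attach a virtual disk, and open SSH to one address range.

    `ec2` is expected to behave like boto3.client("ec2")."""
    # Start (launch) exactly one instance from a machine image.
    resp: Dict[str, Any] = ec2.run_instances(
        ImageId=ami_id, InstanceType=instance_type, MinCount=1, MaxCount=1,
        SecurityGroupIds=[security_group_id])
    instance_id = resp["Instances"][0]["InstanceId"]

    # Wait until the instance is running before attaching storage.
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])

    # Attach an existing EBS volume as a virtual disk (placeholder device name).
    ec2.attach_volume(VolumeId=volume_id, InstanceId=instance_id, Device="/dev/sdf")

    # Firewall rule: allow SSH (TCP port 22) only from the given CIDR block.
    ec2.authorize_security_group_ingress(
        GroupId=security_group_id,
        IpPermissions=[{"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
                        "IpRanges": [{"CidrIp": admin_cidr}]}])
    return instance_id


def stop(ec2: Any, instance_id: str) -> None:
    """Stop (but do not terminate) the instance."""
    ec2.stop_instances(InstanceIds=[instance_id])
```

With real credentials you would pass `boto3.client("ec2", region_name=...)`; note that an EBS volume can only be attached to an instance in the same Availability Zone.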

IaaS is a type of cloud computing service that offers pay-as-you-go access to basic compute, storage, and network resources. It is one of four kinds of cloud services, alongside software as a service (SaaS), platform as a service (PaaS), and serverless computing.

You can cut down on hardware costs, reduce on-premises data center maintenance, and gain real-time business insights by moving your company infrastructure to an IaaS solution. IaaS solutions offer you the freedom to adjust the size of your IT resources as necessary. Additionally, they increase the dependability of the underlying infrastructure and aid in the quick deployment of new apps. With IaaS, you can bypass the costs and complexities associated with purchasing and managing physical servers and data center infrastructure. Each resource is offered as a separate service component, and you only pay for a given resource for as long as you need it. A cloud computing service provider like Azure manages the infrastructure while you purchase, install, configure, and manage your own software—including operating systems, middleware, and applications.

- Platform as a Service

In addition to infrastructure-oriented clouds that provide basic computing and storage services, another approach is to offer a higher level of abstraction that makes a cloud easily programmable; this is known as Platform as a Service (PaaS). Developers can build and launch applications on a cloud platform without necessarily knowing how many processors or how much memory those applications will use. A variety of programming models and specialized services (such as data access, authentication, and payments) are also available as building blocks for new apps.

Platform-as-a-Service (PaaS) is a full-featured environment for cloud creation and deployment that offers tools for creating both basic cloud-based apps and complex enterprise applications. You obtain the required resources from a cloud service provider using a pay-as-you-go payment model and access them via a secure internet connection.

In addition to infrastructure elements like servers, storage, and networking technology, PaaS also contains middleware, development tools, BI (business intelligence) services, database management systems, and other things. Web application development, testing, deployment, management, and updating are all supported by PaaS.

PaaS eliminates the expense and complexity of purchasing and managing resources such as software licenses, middleware, container orchestrators like Kubernetes, and development tools.
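On a PaaS, the developer typically supplies only the application code, while the platform supplies servers, scaling, and the runtime. As a minimal sketch, assuming a platform that runs standard Python WSGI applications (many Python PaaS offerings do, although each has its own configuration files), the whole deployable unit can be this small. The routes and the `/health` endpoint are hypothetical examples.

```python
from typing import Callable, Iterable, List, Tuple

StartResponse = Callable[[str, List[Tuple[str, str]]], object]


def app(environ: dict, start_response: StartResponse) -> Iterable[bytes]:
    """Minimal WSGI application. The developer writes only this function;
    the platform decides how many processes and machines run it."""
    path = environ.get("PATH_INFO", "/")
    if path == "/":
        status, body = "200 OK", b"Hello from a PaaS-hosted app"
    elif path == "/health":
        status, body = "200 OK", b"ok"  # hypothetical endpoint for platform health checks
    else:
        status, body = "404 Not Found", b"not found"
    start_response(status, [("Content-Type", "text/plain; charset=utf-8"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

Locally you can serve it with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`; on a PaaS, that step (and everything beneath it) is handled by the provider.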

- Software as a Service

SaaS sits at the top of the cloud stack. End users access the services this layer offers through Web portals. Because online software services offer the same functionality as locally installed programs, consumers are increasingly switching from local programs to online services. Today, traditional desktop applications such as word processors and spreadsheets are offered as services on the Web. The SaaS delivery model relieves users of the burden of software maintenance while simplifying development and testing for service providers.

SaaS (Software-as-a-Service) allows users to connect to and use cloud-based apps over the Internet. Basic examples are email, calendar, and office tools (e.g. Microsoft Office 365).

With a pay-as-you-go payment model, SaaS provides a complete software solution that you purchase from a cloud service provider. Your business pays a fee to use the app, and users then connect to it over the internet (usually with a web browser). The app's data, along with all of its supporting hardware, middleware, and software, is housed in the service provider's data center. Under the applicable service contract, the provider manages the hardware and software and guarantees the app's availability and security as well as the privacy of your data. SaaS lets your company launch an app quickly with little up-front cost.

Figure 1.2: Cloud stack services overview.

Cloud Providers

- Amazon – Most commonly used Cloud Solutions provider

AWS was introduced in 2006 to provide developers with on-demand IT infrastructure. From the beginning, it focused primarily on businesses rather than end users. Amazon already needed globally distributed data centres and highly available services to run its own e-commerce platform, and through Amazon Web Services it made these capabilities, along with interfaces to other applications, available to third parties.

Servers

EC2 (Elastic Compute Cloud) provides virtual servers running a Linux distribution or Microsoft Windows Server. Usage is billed by the hour or by the second, with no fixed contract term. You can choose from multiple instance types based on available memory and compute capacity (virtual processors). Unlike those of some other providers, EC2 VM instances are not persistent: memory contents are cleared when an instance is stopped.
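Per-second billing matters most for short jobs. The sketch below compares charging every started hour in full with per-second charging. The hourly rate is a made-up figure, and the 60-second minimum reflects AWS's published rule for per-second billing of Linux instances as we understand it; check the current EC2 pricing pages before relying on either.

```python
import math


def hourly_billed_cost(runtime_s: int, rate_per_hour: float) -> float:
    """Cost when every started hour is charged in full."""
    return math.ceil(runtime_s / 3600) * rate_per_hour


def per_second_billed_cost(runtime_s: int, rate_per_hour: float,
                           minimum_s: int = 60) -> float:
    """Cost when usage is charged per second, subject to a minimum charge."""
    billable_s = max(runtime_s, minimum_s)
    return billable_s * rate_per_hour / 3600
```

At a hypothetical rate of $0.36 per hour, a 10-minute job costs $0.36 under hourly rounding but only $0.06 under per-second billing.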

Storage

S3 (Simple Storage Service) is an object storage service that can, in principle, store any amount of data and is billed by usage. Access is via HTTP/HTTPS. Amazon pioneered the concepts of buckets and objects, which are similar to directories and files and have become standards that other vendors emulate. Amazon also offers network file systems through Elastic File System (EFS) and file archiving through Glacier. S3 is designed for 99.999999999 percent ("eleven nines") durability of your data. Amazon Elastic Block Store (EBS) provides block-level storage volumes that you can attach to your Amazon EC2 instances.
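The eleven-nines figure is easier to interpret as an expected loss rate. Assuming, as AWS describes it, that durability is expressed per object over one year, the annual loss probability per object is 1 − 0.99999999999 = 10⁻¹¹, so storing N objects gives an expected N × 10⁻¹¹ lost objects per year. The helper below does that arithmetic exactly with fractions; it is a back-of-the-envelope model (independent losses), not a guarantee.

```python
from fractions import Fraction


def expected_annual_losses(num_objects: int,
                           durability: str = "0.99999999999") -> Fraction:
    """Expected objects lost per year, assuming each object independently
    survives a year with probability `durability`."""
    loss_probability = 1 - Fraction(durability)
    return num_objects * loss_probability


def years_per_expected_loss(num_objects: int,
                            durability: str = "0.99999999999") -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(num_objects, durability)
```

With 10,000,000 objects this gives one expected loss every 10,000 years, which is consistent with how AWS itself illustrates the figure.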

To facilitate the transfer of large amounts of data, AWS rents out physical storage devices through its Snowball service: you copy your data onto the device and ship it back to AWS by courier. This makes transferring very large amounts of data (hundreds of terabytes to petabytes) to the cloud much faster.
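A quick calculation shows why shipping a device can beat the network. The sketch below estimates how long a transfer takes over a link of a given speed, assuming an illustrative 80% effective utilization; all numbers are examples, not measurements.

```python
def transfer_days(data_terabytes: float, link_gbps: float,
                  efficiency: float = 0.8) -> float:
    """Days needed to move `data_terabytes` (decimal TB) over a link of
    `link_gbps` gigabits per second at the given effective utilization."""
    bits = data_terabytes * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 86_400
```

At 1 Gbit/s, 100 TB takes about 11.6 days and 1 PB about 116 days, before accounting for retransmissions or competing traffic, which is why a courier-shipped device can be faster at this scale.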

Network

CloudFront is a content delivery network that distributes content from other AWS services, such as EC2 and S3, globally to reduce access times. The service is limited to the HTTP(S) protocol and is billed per gigabyte, by region of access. Depending on the configuration, individual files or entire domains can be served through CloudFront and optionally encrypted with SSL. Amazon also operates a domain name service, Route 53, which translates between domain names and IP addresses. Billing is based on the hosted zones used.

- Microsoft Azure

Cloud computing providers offer network-based applications and databases, so users' files reside on the provider's servers rather than on their own computers. Microsoft wanted to focus more on Internet-based services and to counter the consumer trend toward cheaper, less powerful computers such as netbooks. A useful side effect for vendors is that software no longer needs to be distributed to end users, which greatly reduces piracy. The offering was intended to mark a distinct change of course for Microsoft. It competes with comparable products such as Google App Engine and Amazon's Elastic Compute Cloud.

Microsoft Azure represents the main part of the newly developed platform, the Microsoft Azure Platform. The platform offers users new services such as databases and new versions of the .NET Framework. Additionally, a data synchronization service based on the Microsoft Windows SharePoint Services system is provided.

- Google Cloud

Google Cloud Platform (GCP), offered by Google, is a suite of cloud computing services that runs on the same infrastructure Google uses internally for its end-user products, such as Google Search and YouTube. Alongside a set of management tools, it provides a series of modular cloud services, including compute, data storage, data analytics, and machine learning. Registration requires credit card or bank account details.

Google Cloud Platform offers infrastructure as a service (IaaS), platform as a service (PaaS), and serverless computing environments.

In April 2008, Google announced App Engine, a platform for developing and hosting web applications in Google-managed data centres. It was the company's first cloud computing service, and it became generally available in November 2011. Since the launch of App Engine, Google has added several more cloud services to the platform.

Google Cloud Platform is part of Google Cloud and includes the Google Cloud Platform public cloud infrastructure, Google Workspace, enterprise versions of Android and Chrome OS, machine learning programming interfaces, and enterprise mapping services.

Remote Patient Monitoring System using IoT or Other Systems in the Cloud

Prototype Overview

In this architecture, we start by publishing data to AWS IoT Core, which integrates with Amazon Kinesis, allowing us to collect, process, and analyze high-bandwidth data in real time. The data can be visualized using Amazon QuickSight. Figure 1.3 shows an overview of the design, which can be implemented in our patient monitoring system.
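A device, or a gateway acting on its behalf, publishes readings to AWS IoT Core as MQTT messages. The sketch below builds a JSON payload and publishes it through a client assumed to behave like boto3's `iot-data` client, whose `publish` call accepts `topic`, `qos`, and `payload`. The topic layout `patients/<id>/vitals` and the field names are our own hypothetical choices; in production, devices usually publish directly over MQTT with X.509 certificates rather than through the SDK.

```python
import json
import time
from typing import Any, Dict, Optional


def build_vitals_message(patient_id: str, heart_rate_bpm: float, spo2_pct: float,
                         timestamp_ms: Optional[int] = None) -> Dict[str, Any]:
    """Hypothetical telemetry record for one set of readings."""
    return {
        "patient_id": patient_id,
        "heart_rate_bpm": heart_rate_bpm,
        "spo2_pct": spo2_pct,
        "timestamp_ms": timestamp_ms if timestamp_ms is not None else int(time.time() * 1000),
    }


def publish_vitals(iot_data: Any, message: Dict[str, Any]) -> str:
    """Publish one reading; an IoT Core rule can then forward the topic to Kinesis."""
    topic = f"patients/{message['patient_id']}/vitals"
    iot_data.publish(topic=topic, qos=1,
                     payload=json.dumps(message).encode("utf-8"))
    return topic
```

QoS 1 (at-least-once delivery) is a reasonable default for clinical telemetry, since a duplicate reading is easier to handle downstream than a lost one.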

Metrics & Analytics:

Amazon Kinesis Data Analytics allows you to gain actionable insights from streaming data. With Amazon Kinesis Data Analytics for Apache Flink, customers can use Java, Scala, or SQL to process and analyze streaming data. The service lets you author and run code against streaming sources to perform time-series analytics, feed real-time dashboards, and create real-time metrics.
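Kinesis Data Analytics jobs are written against Flink or SQL APIs, which we do not reproduce here. The core idea behind a real-time metric, however, is a windowed aggregation over a stream. The plain-Python sketch below computes a one-minute tumbling-window average heart rate per patient from `(timestamp, patient, value)` events; the window size and the event shape are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[int, str, float]  # (timestamp in ms, patient id, heart rate)


def tumbling_window_averages(events: Iterable[Event], window_ms: int = 60_000
                             ) -> Dict[Tuple[str, int], float]:
    """Average value per (patient, window start) over non-overlapping windows."""
    sums: Dict[Tuple[str, int], float] = defaultdict(float)
    counts: Dict[Tuple[str, int], int] = defaultdict(int)
    for ts, patient, value in events:
        window_start = ts - ts % window_ms  # align to the window boundary
        key = (patient, window_start)
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}
```

A streaming engine such as Flink performs the same grouping incrementally and emits each window's result as soon as the window closes, instead of reading the whole input first.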

Reporting and Monitoring:

For reporting we can use Amazon QuickSight for batch and scheduled dashboards. If the use-case demands a more real-time dashboard capability, users can use Amazon OpenSearch with OpenSearch Dashboards.

Amazon QuickSight is a machine learning-based business intelligence service built for the cloud under Amazon Web Services. This enables businesses to make smarter, data-driven decisions.

Amazon Timestream is a fast, scalable, serverless time-series database service that, according to AWS, lets you store and analyze trillions of events per day up to 1,000 times faster than relational databases. Timestream automatically scales up or down to adjust capacity and performance, so you don't have to manage the underlying infrastructure.

Various monitoring and alerting devices can use and integrate the data stored in S3 and Amazon Timestream.

If the application involves high-bandwidth streaming data points, this design makes it possible to analyze that high-bandwidth, real-time streaming data and derive actionable insights from it.
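Alerting on top of the analyzed stream can start from simple threshold rules. The sketch below flags readings that fall outside configurable ranges. The metric names match no particular product, and the default limits are placeholders chosen for illustration, not clinical guidance; in practice clinicians would set them per patient.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Placeholder (low, high) limits for illustration only; NOT clinical guidance.
DEFAULT_LIMITS: Dict[str, Tuple[float, float]] = {
    "heart_rate_bpm": (50.0, 120.0),
    "spo2_pct": (92.0, 100.0),
}


@dataclass
class Alert:
    patient_id: str
    metric: str
    value: float
    limits: Tuple[float, float]


def check_reading(reading: Dict[str, float], patient_id: str,
                  limits: Dict[str, Tuple[float, float]] = DEFAULT_LIMITS) -> List[Alert]:
    """Return one alert per metric whose value lies outside its limits."""
    alerts: List[Alert] = []
    for metric, (low, high) in limits.items():
        value = reading.get(metric)
        if value is not None and not low <= value <= high:
            alerts.append(Alert(patient_id, metric, value, (low, high)))
    return alerts
```

Such a check could run in the stream-processing step, with any resulting alerts forwarded to a notification service or an on-call clinician.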

Figure 1.3: Prototype.

Here, data generated by an IoT device provide insights about the patient. In the same way, we can integrate other devices, systems, or even AI-based software to stream and monitor data in real time.

Artificial Intelligence in Cloud

- Applying Artificial Intelligence to streamline cloud solutions

- Artificial intelligence (AI) refers to technology that mimics human intelligence, programmed to think and act like humans. AI technologies such as deep learning are also associated with functions of the human mind, including problem solving. AI offers cutting-edge techniques for making accurate predictions in a cloud environment.

- Artificial intelligence has become essential for enhancing the functionality of cloud applications. Cloud applications run in a cloud environment rather than being hosted on a local computer or server. In the future, AI could substantially change the performance of cloud applications, transforming marketing strategies, organizational workflows, and customer behavior. Enterprises can use AI to optimize the cloud computing applications they need for their corporate servers and machines.

- AI technology benefits organizations in speech and image processing, text analysis, and machine learning. Unlike hard drives and other local storage devices, which require IT administration skills, software patching, hardware setup, and racking and stacking, cloud applications combined with AI technology are delivered over the internet.

- In addition, AI's ability to observe and collect information from many locations can help organizations develop effective measures to respond to security threats. AI can also help human security teams recognize their essential role and successfully carry out their task of strengthening organizational security.

- Artificial Intelligence and its potential in Healthcare

Artificial Intelligence (AI) and related technologies are becoming more and more prevalent in business and society and are gradually being used in healthcare. These technologies have the potential to transform many aspects of patient care and management processes within healthcare providers, payers, and pharmaceutical companies.

There are already numerous research studies suggesting that AI can perform as well or better than humans on critical health tasks such as diagnosing disease. Algorithms are already outperforming radiologists in detecting malignancies and guiding researchers in assembling cohorts for costly clinical trials. However, for a variety of reasons, we believe it will be many years before AI replaces humans in a wide range of medical process areas. This article discusses both the potential for automating aspects of AI-delivered care and some barriers to the rapid implementation of AI in healthcare.

Amazon Timestream Database for IoT, Sensor and other time series data

Timestream saves time and cost in managing the lifecycle of time-series data by keeping recent data in memory and moving historical data to a cost-optimized storage tier based on user-defined policies. This component lets us manage patients' historical data generated by IoT applications and analyze it with built-in analytics. The architecture below can be used in IoT applications. Timestream, or another time-series database, helps us use time-series data to make better decisions. Open-source time-series databases are also available and can be used depending on their compatibility with the software stack.
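Timestream's two-tier lifecycle (recent data in the memory store, older data in the cheaper magnetic store, each governed by a retention period) can be modeled in a few lines to reason about where a patient's readings would live. The function below is a simplified, hypothetical model that does not call Timestream; it assumes the magnetic retention period starts when the memory retention period ends, and the default retention values are examples. Check the Timestream documentation for the exact retention semantics.

```python
from datetime import datetime, timedelta


def storage_tier(record_time: datetime, now: datetime,
                 memory_retention: timedelta = timedelta(hours=24),
                 magnetic_retention: timedelta = timedelta(days=365)) -> str:
    """Return 'memory', 'magnetic', or 'expired' for a record of a given age
    under the simplified model described above."""
    age = now - record_time
    if age < timedelta(0):
        raise ValueError("record_time is in the future")
    if age <= memory_retention:
        return "memory"     # recent readings: fast queries for live dashboards
    if age <= memory_retention + magnetic_retention:
        return "magnetic"   # historical readings: cheaper, for trend analysis
    return "expired"        # past both retention periods: deleted
```

In a monitoring system, the memory retention would be sized to cover the live dashboards, while the magnetic retention would follow the clinic's data-retention policy.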

Use cases for remote patient monitoring system

- COVID-19 remote patient monitoring

Since the outbreak of coronavirus disease (COVID-19) began in December 2019, the pandemic has forced health systems around the world to take steps to protect both patients and staff, to prepare for and adapt to surges in patient volume, and to respond in unprecedented ways to support critically ill patients. Healthcare systems have reported their initial experiences, including establishing safe COVID-19 testing options using drive-through methods, converting to a hospital incident command center structure for rapid prioritization and clear decision making, canceling elective surgeries, shifting or delaying other routine care, and aggressively converting in-person care to virtual options with significant telehealth transformation.

COVID-19 Monitoring Solution

A patient education and remote patient monitoring solution with COVID-19 specific content was configured and deployed (GetWell Loop; GetWellNetwork, Bethesda, MD). The solution included critical steps of enrollment, engagement, monitoring with first responder escalation, and treatment completion. Prior to the COVID-19 outbreak, this platform was being used for enhanced recovery after surgery programs to offer educational materials and to engage patients around monitoring their pain control, diet, and other self-care. The COVID-19 specific program was able to repurpose this tool to handle the rapid influx of patients allowing for escalation protocols to first responders.

The prototype, with the modifications proposed in this report (discussed in the sections above), can be used to monitor COVID-19 patients remotely and to collect pertinent data for further prediction and prevention of the disease. It can record symptoms related to the disease and may help reduce fatalities among critical patients.

- Cloud Based ECG Visual Analysis

According to the World Health Organization (WHO), cardiovascular disease (CVD) is the leading cause of death worldwide: an estimated 17.9 million deaths in 2019, or 32% of all deaths globally, were attributable to CVD.

Electrocardiogram (ECG) visual analysis is a common task performed by healthcare specialists as part of the pre-diagnosis of cardiovascular diseases. However, when an ECG specialist analyzes long recordings (such as 24-hour Holter recordings), the task becomes more difficult and complicated, increasing the chance of misdiagnosis. Earlier detection of abnormalities gives patients with cardiovascular disease a significantly better chance of recovery.

Architecture Model

The application consists of a front-end and a back-end. Fig. 1.8 shows its modules: ECG upload, ECG-to-image conversion, R-peak identification, cardiac cycle windowing, identification of arrhythmias (irregular heartbeats), labeling, and finally report download. The prototype discussed in this report can be integrated with this application for more accurate patient monitoring and reporting.
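Two of the modules listed above, R-peak identification and cardiac cycle windowing, feed directly into arrhythmia identification. Given R-peak sample indices, the sketch below derives RR intervals, an average heart rate, and fixed windows around each beat, and flags beats whose preceding RR interval deviates strongly from the median. The 20% tolerance and the window lengths are hypothetical parameters for illustration, not a validated arrhythmia criterion.

```python
from statistics import median
from typing import List, Sequence, Tuple


def rr_intervals_s(r_peaks: Sequence[int], fs: float) -> List[float]:
    """Time between consecutive R peaks, in seconds."""
    return [(b - a) / fs for a, b in zip(r_peaks, r_peaks[1:])]


def mean_heart_rate_bpm(r_peaks: Sequence[int], fs: float) -> float:
    """Average heart rate from the mean RR interval (needs at least two peaks)."""
    rr = rr_intervals_s(r_peaks, fs)
    return 60.0 / (sum(rr) / len(rr))


def beat_windows(r_peaks: Sequence[int], fs: float, n_samples: int,
                 before_s: float = 0.25, after_s: float = 0.45) -> List[Tuple[int, int]]:
    """(start, end) sample ranges around each R peak, clipped to the recording."""
    pre, post = int(before_s * fs), int(after_s * fs)
    return [(max(0, r - pre), min(n_samples, r + post)) for r in r_peaks]


def irregular_beats(r_peaks: Sequence[int], fs: float,
                    tolerance: float = 0.2) -> List[int]:
    """R peaks whose preceding RR interval differs from the median RR
    interval by more than `tolerance` (as a fraction of the median)."""
    rr = rr_intervals_s(r_peaks, fs)
    if not rr:
        return []
    m = median(rr)
    return [r_peaks[i + 1] for i, x in enumerate(rr) if abs(x - m) > tolerance * m]
```

For a 250 Hz recording with R peaks at samples 0, 250, 500, 750, 900, and 1150, the beat at sample 900 arrives 0.6 s after its predecessor instead of about 1 s, so it is the one flagged.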

Pattern identification in ECG visual data

- There are multiple algorithms for pattern detection, or R-peak identification, in an electrocardiogram waveform. The Stationary Wavelet Transform (SWT; see the figure below) is one of the most widely used algorithms for detecting QRS complexes because of its high detection rate, with reported accuracy between 7% and 99.8%.
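The idea behind SWT-based QRS detection can be sketched in pure Python: an undecimated (stationary) Haar wavelet transform computed with the à trous scheme, whose detail coefficients respond strongly to the steep slopes of the QRS complex, followed by thresholding and a refractory period. The Haar wavelet, decomposition level, threshold ratio, and 250 ms refractory period are simplifying assumptions of ours; published SWT detectors typically use other wavelets, adaptive thresholds, and band-pass pre-filtering.

```python
import math
from typing import List, Sequence


def haar_swt_detail(signal: Sequence[float], level: int) -> List[float]:
    """Detail coefficients at `level` of an undecimated Haar wavelet transform.

    A trous scheme: no downsampling, and at level j the two filter taps are
    2**(j-1) samples apart. The signal is treated as periodic."""
    n = len(signal)
    approx = [float(x) for x in signal]
    detail = [0.0] * n
    for j in range(1, level + 1):
        step = 2 ** (j - 1)
        nxt = [0.0] * n
        for i in range(n):
            a, b = approx[i], approx[(i + step) % n]
            nxt[i] = (a + b) / math.sqrt(2)     # low-pass: approximation
            detail[i] = (a - b) / math.sqrt(2)  # high-pass: detail
        approx = nxt
    return detail


def detect_r_peaks(signal: Sequence[float], fs: float, level: int = 2,
                   threshold_ratio: float = 0.3, refractory_s: float = 0.25) -> List[int]:
    """Return sample indices of detected R peaks."""
    energy = [d * d for d in haar_swt_detail(signal, level)]
    peak_energy = max(energy, default=0.0)
    if peak_energy == 0.0:
        return []
    threshold = threshold_ratio * peak_energy
    refractory = int(refractory_s * fs)
    half_window = 2 ** level  # radius for locating the true maximum in the raw signal
    peaks: List[int] = []
    i = 0
    while i < len(signal):
        if energy[i] >= threshold:
            lo, hi = max(0, i - half_window), min(len(signal), i + half_window + 1)
            r = max(range(lo, hi), key=lambda k: signal[k])
            peaks.append(r)
            i = max(i + 1, r + refractory)  # skip the rest of this QRS complex
        else:
            i += 1
    return peaks
```

On a 250 Hz recording, the default refractory period limits detection to roughly 240 beats per minute, which is above physiological heart rates while suppressing double detections within one QRS complex.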

The QRS complex consists of three of the graphical deflections seen on a typical electrocardiogram (ECG or EKG). The figure below shows a CNN model, an artificial intelligence method for signal classification and prediction. A CNN (convolutional neural network) is a type of artificial neural network (ANN) that works with complex datasets, including sensor data and ECG signals. In artificial neural networks such as CNNs, hidden layers sit between the input and the output of the algorithm: each layer applies weights to its inputs and passes the result through an activation function to produce its output. In short, the hidden layers perform nonlinear transformations of the high-dimensional data (video, signals, images) fed into the network. The number of hidden layers depends on the data and on the function of the neural network.

Figure: Deep learning model.

Figure: SWT implementation.
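The hidden-layer computation described above, with weights applied to the inputs followed by a nonlinear activation, can be written out explicitly. The pure-Python sketch below shows a 1-D convolutional layer with a ReLU activation (the basic building block of a CNN for ECG signals) and a fully connected hidden layer. The kernel, weights, and inputs are toy values; a real model would learn them during training, typically with a framework such as PyTorch or TensorFlow.

```python
from typing import List, Sequence


def relu(values: Sequence[float]) -> List[float]:
    """Rectified linear activation: negative values become zero."""
    return [max(0.0, v) for v in values]


def conv1d(signal: Sequence[float], kernel: Sequence[float],
           bias: float = 0.0) -> List[float]:
    """'Valid' 1-D convolution as used in deep learning
    (cross-correlation, i.e. the kernel is not flipped)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]


def dense(inputs: Sequence[float], weights: Sequence[Sequence[float]],
          biases: Sequence[float]) -> List[float]:
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]
```

For example, the edge-detecting kernel `[-1, 0, 1]` applied to the rising-then-falling input `[0, 1, 2, 3, 2, 1, 0]` gives `[2, 2, 0, -2, -2]`, and ReLU keeps only the rising part, `[2, 2, 0, 0, 0]`. Stacking several convolution and activation layers, usually with pooling in between, forms the hidden layers of a CNN.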

In order to monitor patients outside of typical clinical settings, such as at home or in a remote location, remote patient monitoring (RPM) system or technology has been developed. This technology may improve access to care and lower the cost of providing healthcare. RPM entails the continuous remote monitoring of patients by their doctors, frequently to track physical symptoms, chronic conditions, or post-hospitalization rehab.

A cloud- and IoT-based remote patient monitoring system facilitates visual and linear analysis of medical data, as well as timely alerting. Remote patient monitoring (RPM) technologies have been beneficial throughout the pandemic, and their significance is only increasing. For more individualized and practical care, RPM can be incorporated into various digital health solutions. With the help of artificial intelligence technologies such as deep learning, we can also improve the accuracy and predictive power of the monitoring and alerting system.

Below is our sample working model of the remote patient monitoring system. The figure shows the sensor data generated by the IoT device, along with a graphical representation of EKG monitoring.

